This is the fifth part of our blog series about the development of our demo. This part focuses on the demosystem and the structure of the effects, not the effects themselves.

# Introduction

Developing a demo can be done in many ways. The basic reason for a demo is to show the skills of the team: code, graphics, music, direction, etc. There are some principles you try to achieve regardless of how you structure a demo.

- Theme, the different screens should not feel disjoint

- Flow, the way effects and other elements connect with each other over time

- Drama, how your various elements are spread out over the execution of the demo

In order to get all of this right you want some way to quickly rearrange your screens and/or the elements on the screen. Some people develop complete storyboards for their demos, using an approach similar to developing a movie. Others just toss their stuff together. I suppose we normally end up somewhere in the middle. As I’ve written in previous parts of this series, we are primarily driven by the code and the effects produced by the programmers. This time we used PowerPoint to structure a rough timeline of the demo. The PowerPoint mainly addressed the theme.

However, the most valuable help was the demo itself and the collaboration tools. Our tool chain enabled us to move pieces around and change the flow of things very quickly. We could also render a movie (AVI) of single parts and of the whole demo. This made it very easy to exchange ideas and change the flow and drama.

# Demosystem

In order to connect all the dots and make it quick to develop the various screens in the demo, we use a small shell that handles the part/screen execution. Each screen in the demo follows a very simple paradigm:

- Init

- Execute

In code this looks like:

    static GOA_PIXMAP8 *part_render(IGOADemoSystem *dsys, GOA_PIXMAP8 *targetbuffer, float t) {
        // render your effect here
    }

    static int part_initialize(IGOADemoSystem *dsys, GOA_PIXMAP8 *targetbuffer) {
        // Initialize (allocate/load/precalc) here
    }

    DSYS_PART part_hexagon = {
        "hexagon",
        0, // flags
        part_initialize,
        part_render,
    };

    #ifndef __DSYS_DEMO_BUNDLE__
    int main(int argc, char **argv) {
        dsys_parse_args(argc, argv);
        dsys_run_single(&part_hexagon);
    }
    #endif

Unless all parts are bundled together (__DSYS_DEMO_BUNDLE__), they are compiled as standalone executables. Two functions are used:

- dsys_parse_args(argc, argv)

- dsys_run_single(&part_hexagon)

dsys_parse_args makes it possible to switch certain features in dsys on or off (like movie/data recording). It is completely optional: if you don’t call it, defaults will be used. dsys_run_single initializes and runs a single part. It sets up the display and enters the rendering loop where part_render is called.
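To make the single-part flow a bit more concrete, here is a minimal sketch of what such a run loop could look like. This is not the actual dsys code: SKETCH_PIXMAP, SKETCH_PART and sketch_run_single are hypothetical stand-ins, the dsys interface pointer is left out, and both the "non-zero means success" convention for initialization and the time in seconds are assumptions.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins for GOA_PIXMAP8 and DSYS_PART (illustration only). */
typedef struct { int width, height; } SKETCH_PIXMAP;

typedef struct {
    const char *name;
    int flags;
    int (*initialize)(SKETCH_PIXMAP *target);
    SKETCH_PIXMAP *(*render)(SKETCH_PIXMAP *target, float t);
} SKETCH_PART;

/* Initialize one part, then call its render function once per frame with a
   time derived from the frame number. Returns the number of frames rendered,
   or -1 if initialization failed (assumed: non-zero means success). */
int sketch_run_single(const SKETCH_PART *part, SKETCH_PIXMAP *target,
                      int maxframes, int frame_rate)
{
    assert(part != NULL && frame_rate > 0);
    if (!part->initialize(target))
        return -1;

    int frame = 0;
    while (frame < maxframes) {
        float t = (float)frame / (float)frame_rate;  /* assumed: seconds */
        SKETCH_PIXMAP *shown = part->render(target, t);
        if (shown == NULL)
            break;  /* nothing to present, stop the loop */
        /* A real host would present 'shown' on the display here. */
        frame++;
    }
    return frame;
}

/* A trivial part that only counts how often it is rendered. */
static int demo_render_calls = 0;

static int demo_initialize(SKETCH_PIXMAP *target)
{
    (void)target;
    return 1;
}

static SKETCH_PIXMAP *demo_render(SKETCH_PIXMAP *target, float t)
{
    (void)t;
    demo_render_calls++;
    return target;
}

static const SKETCH_PART demo_part = { "demo", 0, demo_initialize, demo_render };
```

Deriving the time from the frame number rather than from a wall clock keeps the output identical from run to run, which is convenient when frames are written to a movie.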

The main function of the actual demo bundle uses a slightly different approach.

    // Actual code from Dark Goat Rises
    int main(int argc, char **argv) {
        dsys_parse_args(argc, argv);

        // Register parts
        dsys_register_part(&part_intro);
        dsys_register_part(&part_tentacle);
        dsys_register_part(&part_harmonics);
        dsys_register_part(&part_lsys);
        dsys_register_part(&part_metalines);
        dsys_register_part(&part_rectunnel);
        dsys_register_part(&part_hexagon);
        dsys_register_part(&part_fspic);
        dsys_register_part(&part_csg);
        dsys_register_part(&part_endtro);
        dsys_register_part(&part_scroller);
        dsys_register_part(&part_landscape);

        // Run demo
        dsys_run_demo();
    }

First, all parts are registered with the demo system engine, and then the bundle run function is called (as opposed to the single part execution function earlier).

The dsys_run_demo() function first initializes all parts, then changes display mode, starts music/timers and begins the rendering. Demo execution requires a bit more context (music, synchronization streams, etc.) than single part execution.
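The register-then-run pattern can be sketched roughly as follows. Everything here is hypothetical (BUNDLE_PART, the fixed-size registry and the bundle_* functions are made up for illustration). In particular, the real system decides which part is active through a sync track, while this sketch simply takes an index from the caller.

```c
#include <assert.h>
#include <stddef.h>

#define BUNDLE_MAX_PARTS 32

/* Hypothetical, simplified part descriptor (illustration only). */
typedef struct {
    const char *name;
    int (*initialize)(void);
    void (*render)(float t);
} BUNDLE_PART;

static const BUNDLE_PART *bundle_parts[BUNDLE_MAX_PARTS];
static int bundle_part_count = 0;

/* Add a part to the registry. Returns 1 on success, 0 if the registry is full. */
int bundle_register_part(const BUNDLE_PART *part)
{
    assert(part != NULL);
    if (bundle_part_count >= BUNDLE_MAX_PARTS)
        return 0;
    bundle_parts[bundle_part_count++] = part;
    return 1;
}

/* Initialize every registered part before any rendering starts.
   Returns the number of parts initialized, or -1 on the first failure
   (assumed convention: non-zero from initialize means success). */
int bundle_initialize_all(void)
{
    for (int i = 0; i < bundle_part_count; i++)
        if (!bundle_parts[i]->initialize())
            return -1;
    return bundle_part_count;
}

/* Render exactly one part for this frame: the one marked active.
   Returns 1 if a part was rendered, 0 if the index is out of range. */
int bundle_render_frame(int active_index, float t)
{
    if (active_index < 0 || active_index >= bundle_part_count)
        return 0;
    bundle_parts[active_index]->render(t);
    return 1;
}

/* Two trivial parts that only count their rendered frames. */
static int intro_frames = 0;
static int endtro_frames = 0;

static int always_ok(void) { return 1; }
static void intro_render(float t) { (void)t; intro_frames++; }
static void endtro_render(float t) { (void)t; endtro_frames++; }

static const BUNDLE_PART bundle_intro = { "intro", always_ok, intro_render };
static const BUNDLE_PART bundle_endtro = { "endtro", always_ok, endtro_render };
```

Initializing everything up front means all loading and precalculation happens before the music starts, so nothing but rendering takes place while the demo runs.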

# Cross platform

The demosystem has two types of APIs:

- IGOADemoSystem: the interface exposed to the parts

- dsys_priv_xxx: a bunch of private functions that must be implemented by the hosting platform

## IGOADemoSystem - for parts

During part initialization and rendering the parts are given an interface pointer exposing certain features:

    typedef struct {
        GOA_DSYS_HANDLE (*get_synctrack)(const char *name);
        double (*get_syncvalue)(GOA_DSYS_HANDLE track);
        int (*get_partstate)();
        int (*set_palette)(int flags, void *param);
        void (*fast_clear)(void *ptrData, uint32_t value, int bytes); // deprecated
        void (*fast_copy)(void *ptrDest, void *ptrSrc, int bytes); // deprecated
        int (*is_recording)();
    } IGOADemoSystem;

NOTE: The clear/copy routines were deprecated over the course of the development.

This proved sufficient for our needs. The sync track is essentially a Rocket track. It is created the first time you call get_synctrack. The part state is not strictly needed. It is an implicit Rocket sync track created by the demosystem to control visibility.

You can fetch the part state and use it as a “signal” of sorts. This avoids an additional track in many cases. The is_recording function returns TRUE/FALSE depending on whether the demosystem is recording data (i.e. line/polygon stream recording is activated), as this requires a part to use special line/polygon functions.
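The "created the first time you call get_synctrack" behaviour boils down to a lookup-or-create by name. Below is a minimal sketch of that idea only. The table, the handle type and the sketch_* functions are hypothetical; the real tracks are Rocket tracks and their values come from the editor or the track data.

```c
#include <assert.h>
#include <string.h>

#define SKETCH_MAX_TRACKS 16
#define SKETCH_NAME_LEN   64

typedef int SKETCH_HANDLE;   /* hypothetical stand-in for GOA_DSYS_HANDLE */

typedef struct {
    char   name[SKETCH_NAME_LEN];
    double value;            /* value at the current playback position */
} SKETCH_TRACK;

static SKETCH_TRACK sketch_tracks[SKETCH_MAX_TRACKS];
static int          sketch_track_count = 0;

/* Return the handle for 'name', creating the track on the first request.
   Returns -1 if the table is full or the name does not fit. */
SKETCH_HANDLE sketch_get_synctrack(const char *name)
{
    assert(name != NULL);
    for (int i = 0; i < sketch_track_count; i++)
        if (strcmp(sketch_tracks[i].name, name) == 0)
            return i;   /* already created earlier */

    if (sketch_track_count >= SKETCH_MAX_TRACKS ||
        strlen(name) >= SKETCH_NAME_LEN)
        return -1;

    strcpy(sketch_tracks[sketch_track_count].name, name);  /* length checked above */
    sketch_tracks[sketch_track_count].value = 0.0;
    return sketch_track_count++;
}

/* Read the current value of a track; unknown handles read as 0.0. */
double sketch_get_syncvalue(SKETCH_HANDLE track)
{
    if (track < 0 || track >= sketch_track_count)
        return 0.0;
    return sketch_tracks[track].value;
}
```

With an interface like this, a part could fetch its handles once during initialization and read the values every frame while rendering.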

A part which relies on recording would basically do the following:

    static int part_initialize(IGOADemoSystem *dsys, GOA_PIXMAP8 *targetbuffer) {
        // We need our own backbuffer, otherwise we could reuse the global one
        backbuffer = goa_pixmap_create_8bit(320, 180, GOA_PIXMAPCREATE_CLEAR | GOA_PIXMAPCREATE_ZBUFFER);

        // Create a 3D frustum for our backbuffer
        frustum = goa_frustum_create((GOA_PIXMAP *)backbuffer, backbuffer->width, backbuffer->height, backbuffer->width, GOA_FRUSTUMCREATE_MATERIAL);

        if (dsys->is_recording()) {
            // If recording line/polygon streaming use poly/line routines with recording ability
            goa_frustum_setpolyfun(frustum, gnk_drawpoly_refz_rec, NULL);
            goa_frustum_setlinefun(frustum, gnk_drawline_refz_dda_rec, NULL);
        } else {
            // Not recording, use reference drawing
            goa_frustum_setpolyfun(frustum, gnk_drawpoly_refz, NULL);
            goa_frustum_setlinefun(frustum, gnk_drawline_refz, NULL);
        }

        // additional stuff goes here
    }

## Dsys private implementation

This is just an implicit API resolved at link time. The platform layer has to implement a certain set of functions. This functionality could also have been implemented using the preprocessor, but the separation was a bit easier to maintain this way.

The API looks like:

    int dsys_priv_initialize(int useDatFile);
    int dsys_priv_run_single(IGOADemoSystem *dsys, DSYS_PART_PRIVATE *part, FILE *stream, int maxframes, int targetFrameRate);
    void dsys_priv_run_demo(IGOADemoSystem *dsys, GOA_LIST *partlist, struct sync_device *rocket);
    GOA_PIXMAP8 *dsys_priv_getbackbuffer();
    double dsys_frame_music_row;
    void dsys_priv_record_demo(IGOADemoSystem *dsys, GOA_LIST *partlist, struct sync_device *rocket, FILE *stream, int targetFrameRate, int maxframes);

    // deprecated and not used
    void dsys_fastclear(void *ptrData, uint32_t value, int bytes);
    void dsys_fastcopy(void *ptrDest, void *ptrSrc, int bytes);

The platform independent layer calls these functions when necessary. The platform dependent layer has to deal with any OS specific code, like setting up the correct GOA display drivers, starting timers, etc.

The whole demo system implementation got quite messy over time as we added more and more features. A lot of it would need a proper refactoring. However, the mess was hidden and the system worked well enough. Also, we usually rewrite this kind of code for each demo, as there are specific requirements and/or changes in the tool chain that invalidate the system. Therefore we did not spend much time or effort on keeping it clean.

What worked really well was the interface towards the parts. I think we had a very simple yet powerful framework where it was easy and fun to develop parts.

# Synchronization

To get the flow and drama in place a demo must work with the music. If the music is up-tempo the effects must respond. If the tempo goes down the effects must match - slower paced effects, perhaps just a still image.

We used Rocket (see: https://github.com/rocket/rocket) to control flow and drama.

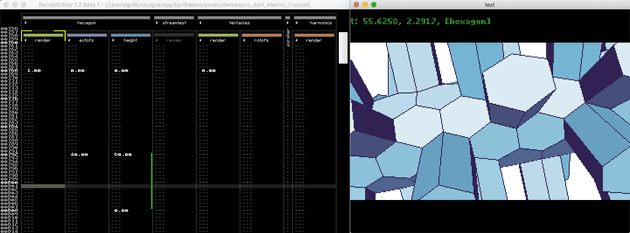

Rocket is built like a sequential tracker. Tracks can be grouped together by means of name mangling. In our case the mangled name consists of the part name (from the registration) and the track name (implicitly defined through “get_synctrack” in the demosystem interface). The implicit render state track is visible as ‘render’ in the image. This track is created by the demosystem in order to control which part to render. We can only handle rendering ONE part at a time. This was mainly a decision taken because the Amiga has limited power.
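As an illustration of the name mangling, a helper along these lines could combine the part name and the track name. The function name and the ':' separator are assumptions made for this sketch, not something taken from our code.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Build "<part>:<track>" into 'out'. The ':' separator is an assumption.
   Returns 1 on success, 0 if the result would not fit in 'outsize' bytes. */
int sketch_mangle_track_name(char *out, size_t outsize,
                             const char *part, const char *track)
{
    assert(out != NULL && part != NULL && track != NULL);

    /* part + ':' + track + terminating '\0' */
    size_t needed = strlen(part) + 1 + strlen(track) + 1;
    if (needed > outsize)
        return 0;

    snprintf(out, outsize, "%s:%s", part, track);
    return 1;
}
```

With this scheme a part only ever asks for a short track name, and the prefix keeps its tracks together and avoids clashes with tracks of the same name in other parts.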

The editor is a third-party tool written by Emoon/TBL. It’s available here: https://github.com/emoon/rocket

The Rocket base code is very simple and effective. We only made some changes to the serialization to support loading through GOA and to get automatic little/big-endian conversion of values.
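The endian conversion itself is a small thing. Below is a minimal sketch of the general technique, not the GOA loader: the function names are hypothetical, and it assumes the data is stored big-endian and that the host uses 32-bit IEEE-754 floats with the same byte order as its integers.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Decode a 32-bit unsigned integer stored big-endian in four bytes.
   Works the same on little- and big-endian hosts because it only uses
   shifts on values, never the host's memory layout. */
uint32_t sketch_read_u32_be(const unsigned char *p)
{
    assert(p != NULL);
    return ((uint32_t)p[0] << 24) |
           ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8)  |
            (uint32_t)p[3];
}

/* Decode a 32-bit float stored big-endian (assumes IEEE-754 on the host). */
float sketch_read_f32_be(const unsigned char *p)
{
    uint32_t bits = sketch_read_u32_be(p);
    float value;
    memcpy(&value, &bits, sizeof value);  /* reinterpret the bits safely */
    return value;
}
```

Reading byte by byte means the same loader code can run unchanged on both the development machine and the target.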

Through the editor and Rocket we are able to run the demo, change values on the fly and watch the results in real time. This was very efficient and worked very well. Again, quick turnaround is the key!